date. August 2022 to May 2023

city. Boulder, Colorado

team. Alexander Chan, Areeb Rohilla, Levi Eirinberg

Software used: Figma, Processing, p5.js, Notion, JavaScript, Adobe Photoshop, Adobe Illustrator, Adobe XD, Adobe After Effects, Printful, Deezer, Webflow

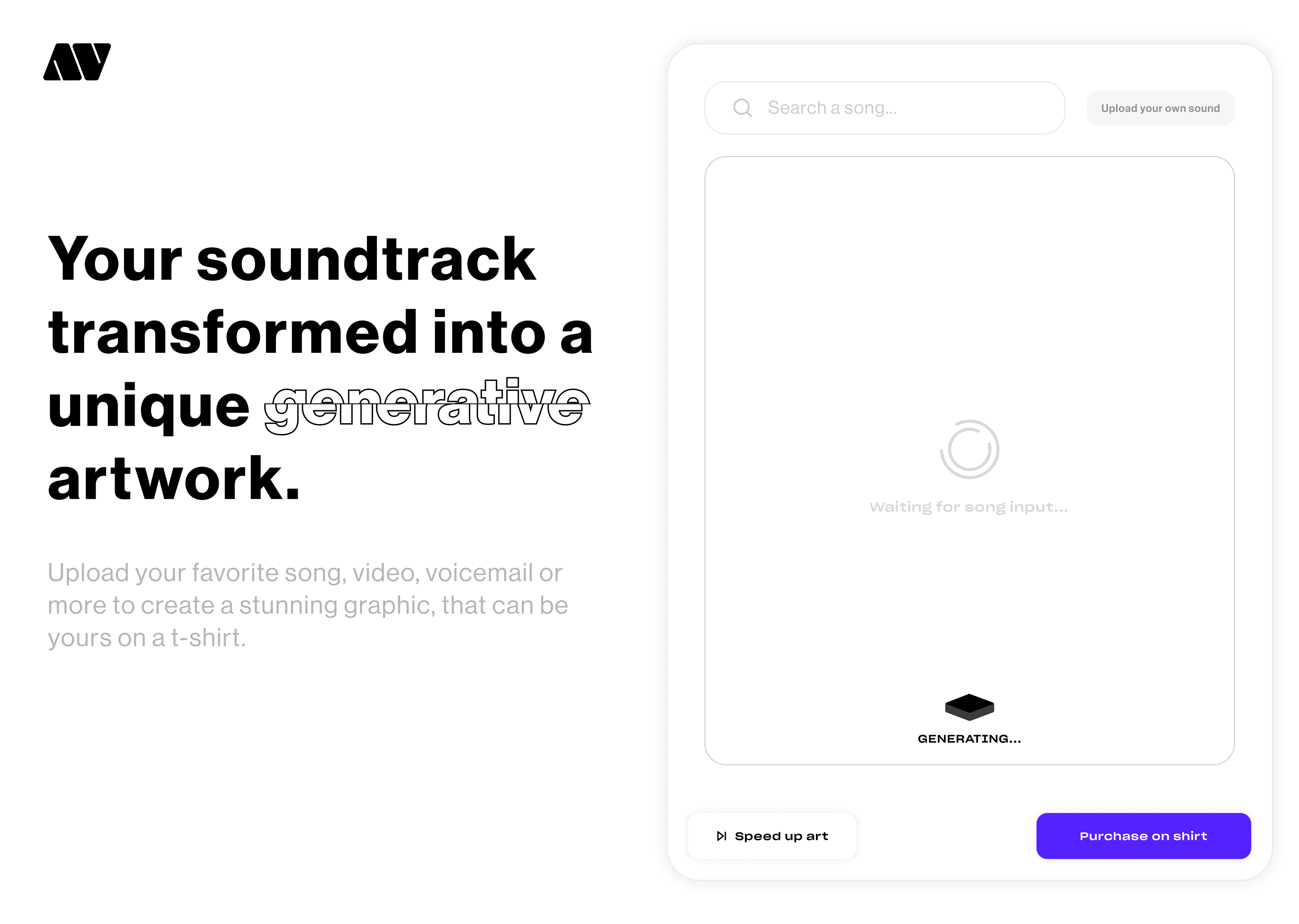

We’re creating a web application that transforms audio data into wearable art.

Abstract Visions lets the user pick a song, then generates a piece of art from the song's frequency, amplitude, and bass. The application reads this audio data and uses it to drive a piece of generative art. Once the art is fully generated, the user can order it printed on a t-shirt.
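As a rough illustration of how frequency, amplitude, and bass could drive the artwork, here is a minimal sketch in JavaScript. It is not our production code. The function names `extractFeatures` and `featuresToStyle`, the `bassBins` cutoff, and the style ranges are all hypothetical. The input is assumed to be one spectrum frame: an array of magnitudes from 0 to 255, ordered from low to high frequency (the shape an FFT analyzer such as the one in p5.sound produces).

```javascript
// Hypothetical helper: summarizes one spectrum frame into three features.
// `spectrum` is assumed to hold magnitudes in 0..255, low frequencies first.
// `bassBins` (an assumed cutoff) is how many low bins count as "bass".
function extractFeatures(spectrum, bassBins = 8) {
  if (spectrum.length === 0) {
    return { amplitude: 0, bass: 0, dominantBin: 0 };
  }
  let sum = 0;
  let bassSum = 0;
  let dominantBin = 0;
  for (let i = 0; i < spectrum.length; i++) {
    sum += spectrum[i];
    if (i < bassBins) bassSum += spectrum[i];
    // Track the loudest bin as a stand-in for the dominant frequency.
    if (spectrum[i] > spectrum[dominantBin]) dominantBin = i;
  }
  const bassCount = Math.min(bassBins, spectrum.length);
  return {
    amplitude: sum / spectrum.length / 255, // overall loudness, 0..1
    bass: bassSum / bassCount / 255,        // low-end energy, 0..1
    dominantBin,                            // index of the loudest bin
  };
}

// Hypothetical mapping from audio features to drawing parameters.
// The ranges (hue 0..360, stroke weight 1..10) are illustrative choices.
function featuresToStyle(features, binCount) {
  return {
    hue: Math.round((features.dominantBin / Math.max(1, binCount - 1)) * 360),
    strokeWeight: 1 + features.bass * 9,
    opacity: features.amplitude,
  };
}
```

In a sketch like this, a bass-heavy song would draw thicker strokes, a louder song would draw more opaque ones, and the dominant frequency would pick the color.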

Each song is limited to a 30-second clip, taken from the most famous or most played part of the song.

Logo branding designs

Logomark and logotype on the inside of the shirt

Logotype

Logomark on hem tag

Alternative Logomark

Main logomark

Different logo variants

Prototype Testing

Generative Art Based on Sound Input

This code demonstration uses Processing to turn sound from the user's microphone into lines that look like scribbles with blotches of black, so the user can see the sound as art.

This code uses Processing to take in sound from the user's microphone and produce lines that vary with the frequency, with a different set of colors running from left to right.
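The left-to-right layout can be sketched as a pure function that turns one spectrum frame into line descriptions. This is an illustrative JavaScript version, not the Processing prototype itself. The name `spectrumToLines`, the 0 to 300 hue range, and the vertically centered lines are assumptions. As before, magnitudes are assumed to be in the range 0 to 255.

```javascript
// Hypothetical helper: lays out one vertical line per frequency bin,
// spaced evenly from left to right across the canvas.
// `spectrum` is assumed to hold magnitudes in 0..255, low frequencies first.
function spectrumToLines(spectrum, width, height) {
  const n = spectrum.length;
  return spectrum.map((magnitude, i) => {
    // t runs from 0 (left edge) to 1 (right edge).
    const t = n > 1 ? i / (n - 1) : 0;
    // Louder bins produce longer lines, centered vertically.
    const length = (magnitude / 255) * height;
    return {
      x: t * width,
      yTop: (height - length) / 2,
      yBottom: (height + length) / 2,
      hue: Math.round(t * 300), // color shifts from left to right
    };
  });
}
```

Each returned object could then be passed to a drawing call such as `line(x, yTop, x, yBottom)` in p5.js, with the stroke color set from `hue`.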

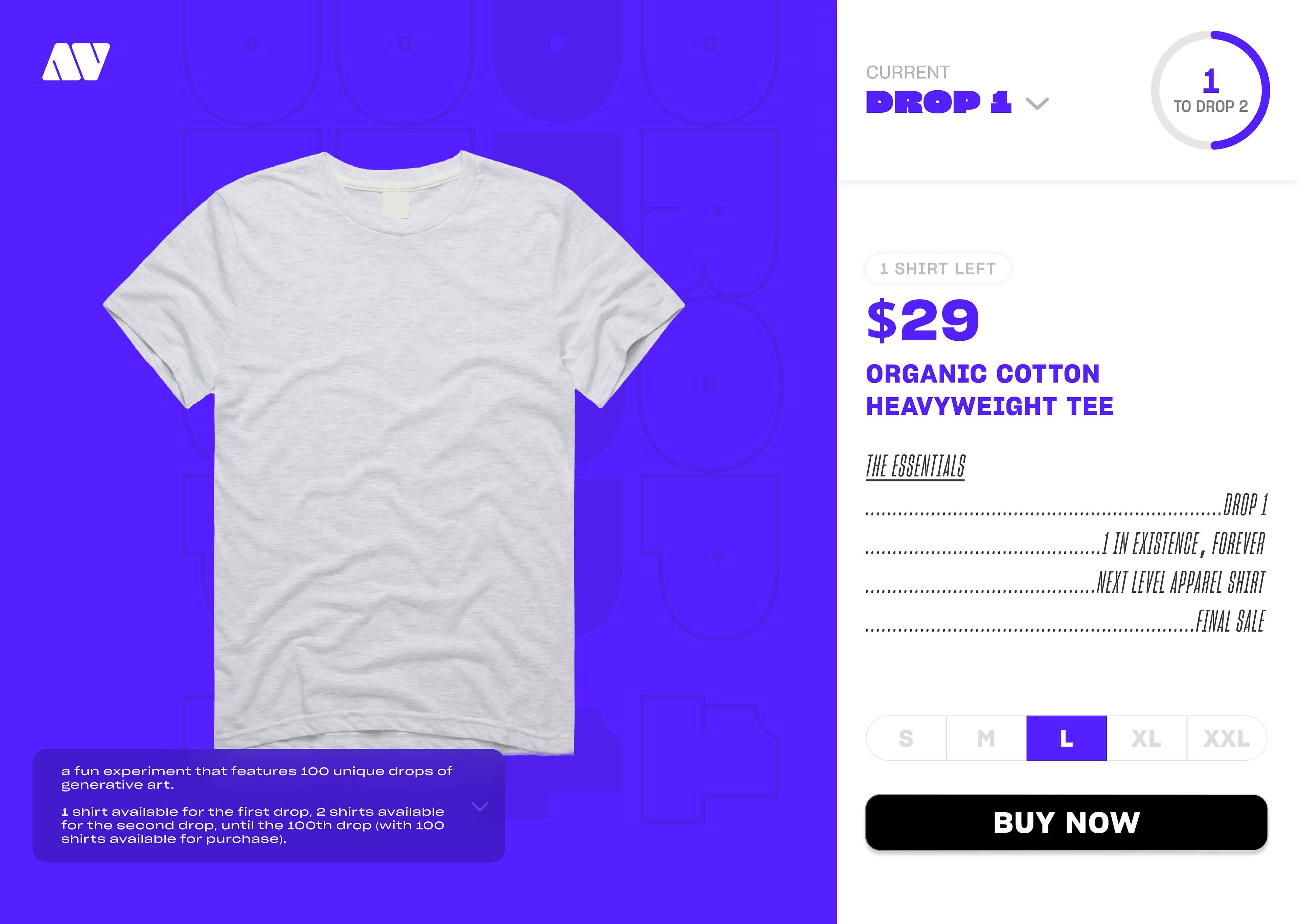

Variations of designs for t-shirts

Various Figma mockups

Process of our work

For the full documentation and process, feel free to contact me for permission.

12 weeks of full documentation